Author: Brenton

-

Microsoft Teams Premium now available

Microsoft this week announced that the new Microsoft Teams Premium license is now generally available for customers around the world. Teams Premium adds a number of features focused primarily on making Teams more personalised and intelligent. Microsoft also added the ability to set up virtual appointments with ease for Teams Premium customers. “Whether you’re…

-

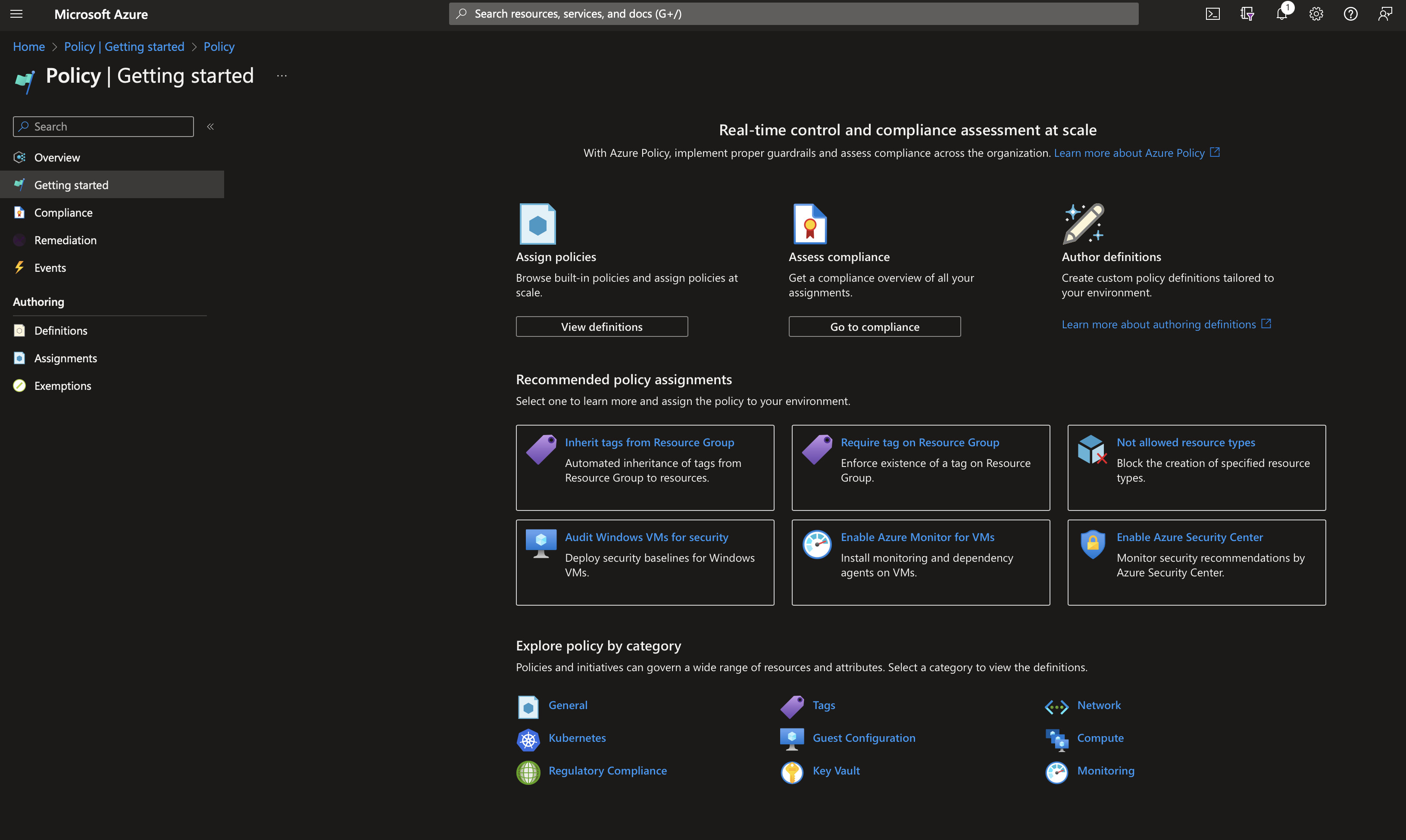

Getting started with Azure Policies

Once you start adopting a cloud service such as Microsoft Azure, one of the first tasks should be looking at how to enforce organisational and best-practice policies at scale when resources are being created.

-

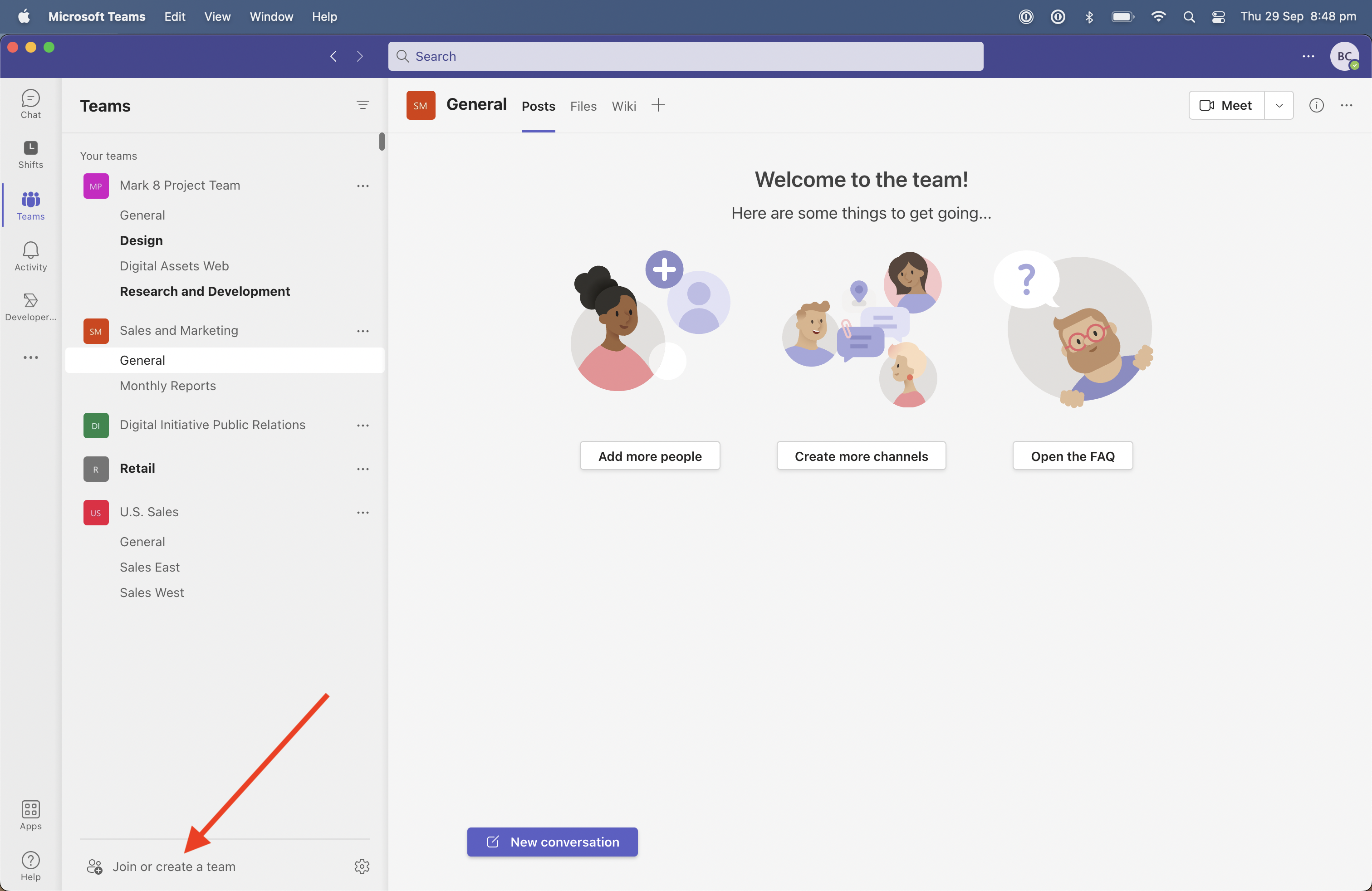

How to create a team in Microsoft Teams

In this post, we’ll take a look at how you can quickly create a team in Microsoft Teams.

-

Microsoft Teams Rooms Pro introduced

Microsoft has announced a series of changes for those using Microsoft Teams Rooms, including the addition of a new “Pro” plan. The Pro plan offers a number of benefits over the free “Basic” plan, including camera and audio AI enhancements to ensure an optimal experience for those dialling in to hybrid meetings. Includes features to…

-

Microsoft Teams Linux client to be retired

Hacker News: We will be retiring the Microsoft Teams desktop client on Linux in 90 days (early December). We hear from you that you want the full richness of Microsoft Teams features on Linux such as background effects, reactions, gallery view, etc. We found the best way to act on this is to offer a…

-

Microsoft announces Teams App Camp

Microsoft 365 Developer Blog: Teams App Camp is an on-demand workshop complete with videos and hands-on labs in which you’ll extend a simple web application to become a full-featured Microsoft Teams application, complete with a sample license service based on Microsoft’s Commercial Marketplace. It doesn’t assume any prior Microsoft knowledge. All the code is in…